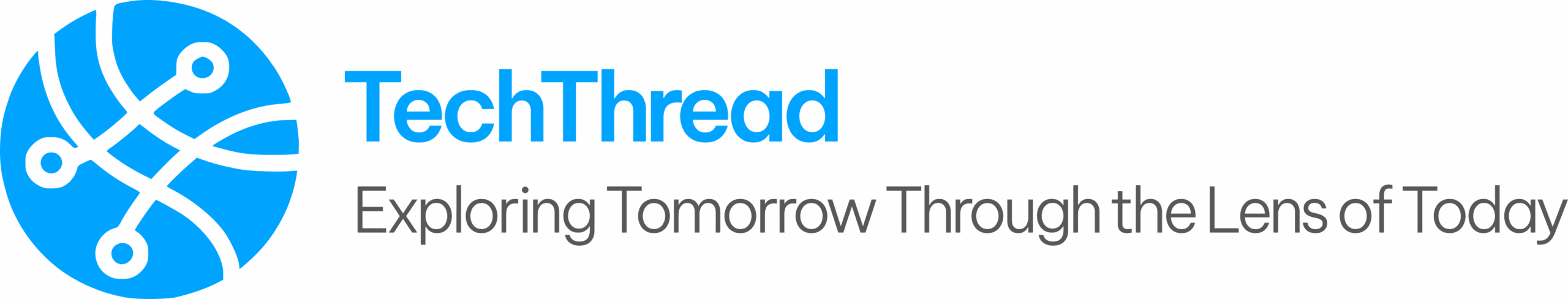

The air was thick with the hum of servers, a digital heartbeat echoing the dawn of a new era. We stood on the precipice of unimaginable power, with models like the rumored GPT-5 promising leaps in intelligence we could barely comprehend. But whispered through the data streams was a chilling question: what happens when this power falls into the wrong hands?

It’s not a hypothetical scenario from a sci-fi blockbuster. The reality of AI cybersecurity vulnerabilities is already here, a silent battlefield where digital skirmishes are fought daily. And with every breakthrough in AI capabilities, the stakes escalate dramatically.

The Invisible War: Understanding AI Vulnerabilities

Imagine an intelligence so vast it could write compelling novels, devise complex scientific theories, or even manage critical infrastructure. Now, imagine that intelligence subtly corrupted, its outputs skewed, its logic hijacked. This isn’t just about data breaches; it’s about the very integrity of the AI itself.

The digital landscape is rife with vectors for attack. Traditional cybersecurity focuses on firewalls and encryption, protecting data at rest and in transit. But AI systems introduce a whole new paradigm of threats, targeting the unique ways these models learn, process, and generate information.

1. Data Poisoning: The Tainted Well

At its core, AI learns from data. If malicious actors inject corrupted or biased data into an AI’s training set, the model will learn these flaws. This is like poisoning a well from which an entire town drinks.

Impact: A self-driving car AI trained on poisoned data might misidentify road signs. A medical diagnostic AI could provide incorrect diagnoses. The consequences can be catastrophic, leading to real-world harm and eroding trust in the AI system.

2. Adversarial Attacks: The Art of Deception

These are arguably the most fascinating and terrifying AI cybersecurity vulnerabilities. Adversarial attacks involve subtle, often imperceptible, alterations to input data that cause an AI model to misclassify or misinterpret. Think of it as an optical illusion designed specifically for an AI.

For instance, a few strategically placed pixels on a stop sign could make a sophisticated vision AI identify it as a “yield” sign. These attacks highlight how differently AI “sees” the world compared to humans and pose a significant threat to critical applications.

3. Model Extraction and Theft: The Digital Heist

Proprietary AI models represent massive investments in research and development. Malicious actors can attempt to “steal” these models by querying them repeatedly and reverse-engineering their logic and architecture. This isn’t just about intellectual property; it’s about gaining access to a powerful tool or understanding its weaknesses for future attacks.

The race to develop powerful AI means that a stolen model could give a competitor or hostile entity a significant, unethical advantage.

4. Prompt Injection: The Art of Subversion

As large language models (LLMs) like those anticipated to power future iterations such as a theoretical GPT-5 become more prevalent, prompt injection emerges as a critical concern. This involves crafting specific inputs that trick the AI into ignoring its pre-programmed safety guidelines or performing actions it wasn’t intended to. It’s a form of social engineering, but for algorithms.

For example, an attacker might bypass content filters or extract sensitive information by cleverly framing questions. This attack vector directly challenges the ethical boundaries and safety mechanisms built into advanced AI systems.

The Looming Shadow of GPT-5 (and Beyond)

The conversation around GPT-5, whether it’s already here or still on the horizon, underscores a fundamental truth: as AI models grow more powerful, their potential for misuse amplifies. A more capable AI is also a more appealing target. Consider the implications if an advanced AI model were to be compromised:

- Misinformation at Scale: An LLM capable of generating highly convincing narratives could be weaponized to spread propaganda or fake news, impacting elections, public opinion, or financial markets.

- Automated Cyberattacks: Imagine an AI that could autonomously identify vulnerabilities and execute complex cyberattacks with unprecedented speed and precision.

- Erosion of Trust: If the public loses faith in the reliability and safety of AI systems, adoption will plummet, hindering technological progress and societal benefits.

As we discussed in a previous post, the very notion of how we perceive AI—as a tool or a companion—is deeply intertwined with our trust in its integrity. Read more about AI User Expectations: Tool or Companion?.

“How Did They Do It?” – The Hypothetical Blueprint for Compromise

While there’s no publicly confirmed “hack” of a specific, unreleased model like GPT-5, we can extrapolate from existing AI cybersecurity vulnerabilities and common attack methodologies to understand how such a compromise could occur.

-

Exploiting API Vulnerabilities: Many advanced AI models are accessed via Application Programming Interfaces (APIs). If these APIs have weak authentication, authorization flaws, or are susceptible to denial-of-service attacks, they become entry points. A breach here could grant unauthorized access to the model, its outputs, or even its underlying infrastructure.

-

Targeting the Training Pipeline: The most impactful attacks often occur during the data collection and training phases. If a system’s data ingestion pipeline is unsecured, data poisoning becomes a viable route. Similarly, if the training environment itself is compromised, an attacker could manipulate model parameters or inject backdoors.

-

Reverse Engineering and Side-Channel Attacks: Even if a model isn’t directly stolen, clever attackers can deduce its architecture, training data characteristics, or even sensitive parameters by carefully observing its outputs or monitoring resource consumption (side-channel attacks). This information can then be used to craft more effective adversarial attacks or create a functional, albeit illegal, replica. The increasing complexity of AI also raises questions about its performance under duress, a topic explored in AI Model Performance: Why Your ChatGPT Seems ‘Dumber’.

-

Social Engineering and Insider Threats: No technology is entirely immune to the human element. Phishing campaigns targeting AI developers, engineers, or data scientists can provide credentials that unlock access to sensitive systems. Disgruntled insiders, with their intimate knowledge of system architecture, pose a significant risk, regardless of how robust technical defenses are.

The Race to Secure Our AI Future

The increasing sophistication of AI models demands an equally sophisticated approach to security. This isn’t just a reactive measure; it requires proactive, integrated strategies:

- Robust Data Governance: Ensuring the integrity and security of training data is paramount. This includes rigorous validation, anomaly detection, and secure storage practices.

- Secure Model Deployment: AI models must be deployed in hardened environments, with strict access controls, continuous monitoring, and prompt patching of vulnerabilities.

- Adversarial Training: One promising defense involves training AI models on adversarial examples to make them more robust against such attacks. This teaches the AI to recognize and resist deceptive inputs.

- Explainable AI (XAI): Developing AI systems whose decision-making processes are transparent and auditable can help identify and mitigate malicious manipulations.

- Ethical AI Development: Prioritizing security and ethical considerations from the very inception of an AI project is crucial. This proactive mindset can prevent many vulnerabilities before they even emerge. Sam Altman’s own fears about superintelligent AI highlight the critical need for responsible development.

- Industry Collaboration: The pace of AI innovation means that no single entity can tackle these challenges alone. Collaborative research and information sharing among companies, academia, and governments are essential to building collective defenses. The AI paradox of immense potential versus unforeseen risks underscores this need for collaboration and foresight. Learn more about navigating this complex balance in The AI Paradox: Navigating Innovation & Uncertainty.

As we continue to push the boundaries of artificial intelligence, understanding and addressing AI cybersecurity vulnerabilities will be as important as the breakthroughs themselves. The future of AI hinges not just on its intelligence, but on its resilience. The constant feedback loop in AI development must increasingly incorporate security insights to build truly robust systems.

What Are Your Thoughts?

The security of our most advanced AI systems is a shared responsibility. What do you believe is the biggest challenge in securing AI? How do you think we can best prepare for the inevitable evolution of AI threats? Share your insights and join the conversation in the comments below!